Caching is a technique for storing copies of resources so that a copy can later be served on request without querying the server again.

Caching is usually seen as something great and useful because it is closely tied to performance, and better performance makes for better software.

In practice, however, HTTP caching is rarely configured properly. The most common reasons are:

- Unfamiliarity with caching techniques

- Insufficient budget

- Not paying attention to request response times. For example, a page that loads in 3-4s is often deemed acceptable, but if HTTP caching could bring the same page down to 1s, there is no reason not to use it.

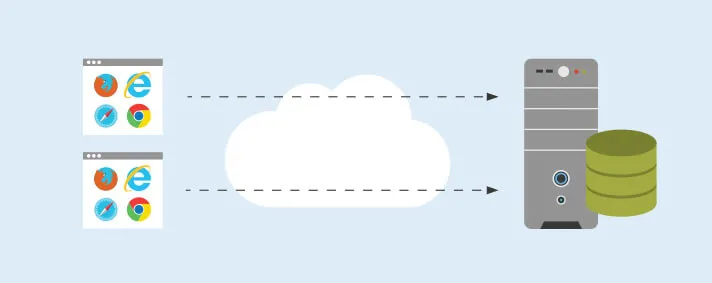

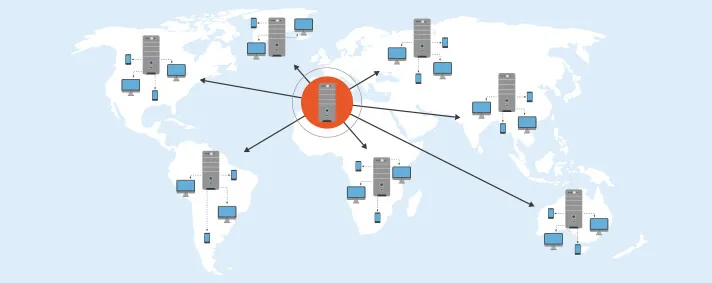

The image below shows a case with no caching: all client requests are forwarded directly to the server.

Image 1 - No cache

There are several kinds of caches, but all of them may be categorized into two groups - private and shared caches.

Private cache

A private cache is dedicated to a single user. The browser stores copies of resources previously obtained from the server in its local cache on the file system.

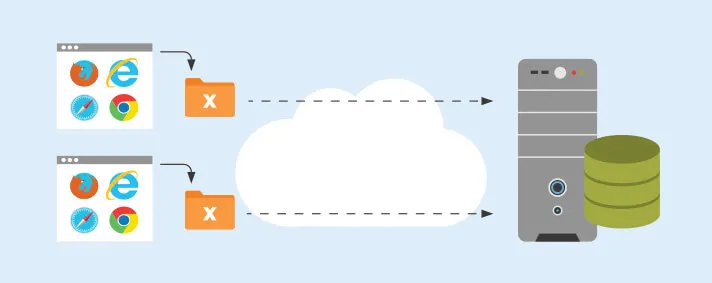

On the next request, the browser first checks whether the local cache contains the resource and makes a request to the server only if it does not, as Image 2 shows. This cache is also used for back/forward navigation, the view-source option, viewing offline content, etc.

Image 2 - Private cache
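The lookup order described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular browser implements its cache; `PrivateCache` and `fake_server` are hypothetical names, and the "server" is a stand-in function that records each call it receives.

```python
from typing import Callable, Dict, List


class PrivateCache:
    """Per-user cache: check the local store first, fall back to the server."""

    def __init__(self, fetch_from_server: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_server
        self._store: Dict[str, bytes] = {}

    def get(self, url: str) -> bytes:
        if url in self._store:       # cache hit: no request to the server
            return self._store[url]
        body = self._fetch(url)      # cache miss: ask the server and keep a copy
        self._store[url] = body
        return body


server_calls: List[str] = []


def fake_server(url: str) -> bytes:
    # Stand-in for a real HTTP request (assumption for this sketch).
    server_calls.append(url)
    return f"content of {url}".encode()


cache = PrivateCache(fake_server)
first = cache.get("/images/logo.png")    # goes to the server
second = cache.get("/images/logo.png")   # served from the local copy
```

After both calls, `server_calls` contains a single entry, because the second request never left the cache.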

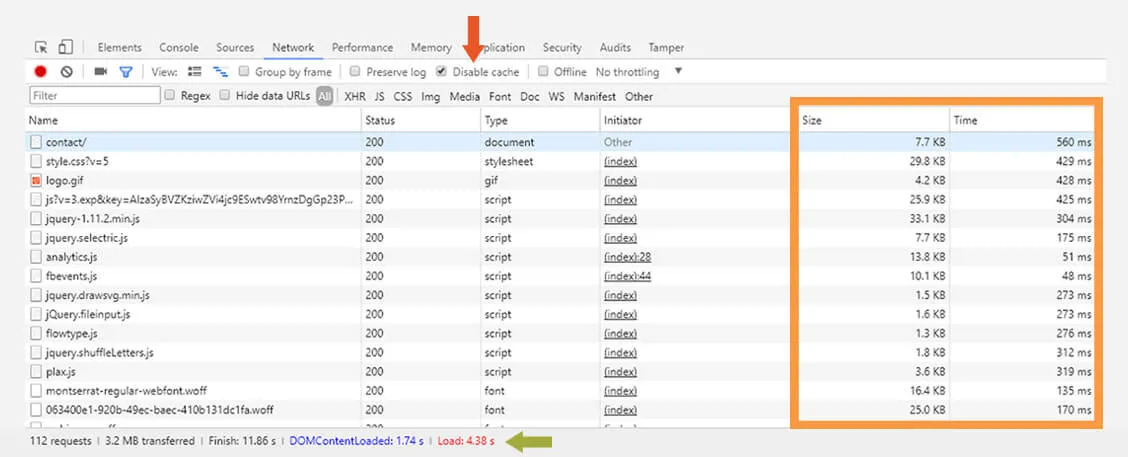

How does this work in practice? Let’s take an example. Image 3 shows some of the resources loaded by the Vega website’s Contact page when the cache is turned off. All resources were obtained from the server and the local cache was not used.

On the right side, we see the time necessary for loading these resources and notice that it takes a bit more than 4 seconds to load the entire page.

Image 3 - Page load with cache disabled

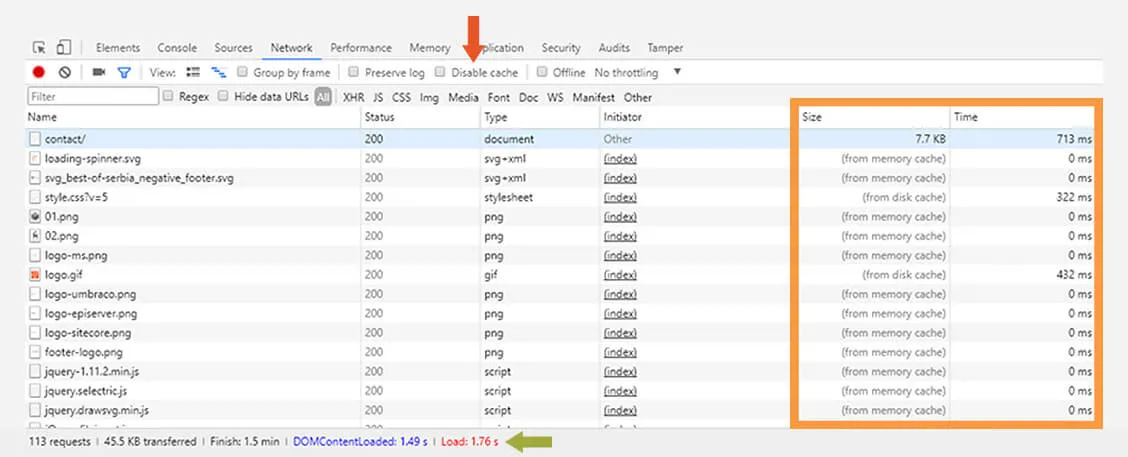

Image 4 shows a list of loaded resources with cache turned on. On the right side you can see that the resources have been loaded either from disk or from memory.

- From disk cache: local or private cache that all browsers have.

- From memory: when a user opens a page, the browser assumes that the next request will be for the same page, so it keeps some of the resources in memory to serve that request faster.

In this case, the load time of the whole page drops to around 1.5 seconds.

Image 4 - Page load with enabled cache

Shared cache

A shared cache keeps a copy of a resource that can be reused by multiple users, which means that the resource is not unique per user. It sits between the client and the web server, intercepts all requests to the server, and decides whether to serve a copy of the resource from cache or contact the server to obtain it.

As shown in the image below, the first request reaches the server to obtain the resource, while all later requests for that resource stop at the shared cache, which returns its copy.

Image 5 - Shared cache
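As a rough sketch of that behaviour, the Python snippet below keeps one store for all users. The names (`origin_server`, `shared_cache_get`, the user names) are made up for the example, and freshness and expiry are left out entirely.

```python
from typing import Dict, List

origin_hits: List[str] = []          # every request that reaches the origin
shared_store: Dict[str, str] = {}    # one store shared by all users


def origin_server(url: str) -> str:
    # Stand-in for the real web server (assumption for this sketch).
    origin_hits.append(url)
    return f"<html>{url}</html>"


def shared_cache_get(user: str, url: str) -> str:
    """Serve any user from the shared store; contact the origin only on a miss."""
    # The key is the URL alone: `user` is deliberately not part of it,
    # which is what makes the copy reusable across users.
    if url not in shared_store:
        shared_store[url] = origin_server(url)
    return shared_store[url]


responses = [shared_cache_get(user, "/contact") for user in ("alice", "bob", "carol")]
```

Three users requested the page, but `origin_hits` holds only one entry.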

HTTP Cache Headers

Caching is configured with HTTP cache headers:

- Expires - a date-time header that tells the cache when a resource becomes stale.

- Cache-Control - an alternative to the Expires header. Caching is configured through various directives, some of which are covered later.

- ETag - a unique identifier of a resource version. It is a string that is opaque to browsers: they do not interpret its value or format, but it is usually an MD5 hash or another checksum of the file content. To check whether the server has a newer version of the resource, the client includes the conditional header If-None-Match in the request, set to the ETag value it received earlier. The server generates the current ETag for the resource and compares the two values: if they match, it returns status 304 (Not Modified) with an empty body; otherwise it returns 200 with the resource content in the response body.

- Last-Modified - contains the time the resource was last modified. If the request contains an If-Modified-Since conditional header, the server compares that value with the last modified date of the file and returns 304 if the file has not changed since then, or 200 with the content otherwise. (The related If-Unmodified-Since header guards updates instead: when the check fails, the server responds with 412 Precondition Failed.) ETag is more accurate than Last-Modified, since the latter only has one-second resolution, but it can be slower to produce when it is computed from the file content.

- Vary - lists the request headers (for example Accept-Encoding or User-Agent) that a cache must take into account when matching a request against a stored response. In other words, the same URL can have several cached variants, and different users may be served different ones depending on the headers they send.
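The ETag round trip from the list above can be illustrated with a small Python sketch. It assumes the MD5-of-content approach mentioned for ETag and a single ETag value in If-None-Match (real requests may carry a list of values or `*`); `make_etag` and `handle_get` are hypothetical helper names, not part of any framework.

```python
import hashlib
from typing import Dict, Tuple


def make_etag(content: bytes) -> str:
    # One common choice: a quoted MD5 hex digest of the file content.
    return '"' + hashlib.md5(content).hexdigest() + '"'


def handle_get(content: bytes, request_headers: Dict[str, str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, response headers, body) for a GET of `content`."""
    etag = make_etag(content)
    if request_headers.get("If-None-Match") == etag:
        return 304, {"ETag": etag}, b""      # unchanged: empty body
    return 200, {"ETag": etag}, content      # new or changed: full body


v1 = b"body { color: black; }"
v2 = b"body { color: navy; }"

# First request: no validator yet, so the full resource is returned.
status_first, headers_first, body_first = handle_get(v1, {})

# Revalidation while the file is unchanged.
status_same, _, body_same = handle_get(v1, {"If-None-Match": headers_first["ETag"]})

# Revalidation after the file changed on the server.
status_changed, _, body_changed = handle_get(v2, {"If-None-Match": headers_first["ETag"]})
```

The second call returns 304 with an empty body, while the third returns 200 with the new content, because the ETag computed from `v2` no longer matches the one the client holds.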

Cache-Control Directives

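- no-cache, no-store - used when we do not want a cached response to be reused without the server's involvement. They are not the same: no-store forbids any cache from storing the response, while no-cache allows storing it but requires revalidation with the server before every reuse. The two are often specified together because browsers, older ones in particular, have not always treated them the same way.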

- public - indicates that a response can be cached anywhere, in a private or a shared cache.

- private - indicates that a response is specific to a single user, so it must not be stored by shared caches such as proxy servers and can be cached only by the private cache.

- immutable - indicates that the response will not change while it is fresh, so the browser does not need to revalidate it, even on a page reload.

- must-revalidate - instructs the cache that a stale resource must be revalidated with the server before it is used. A resource becomes stale once its cache time expires.

- max-age, s-maxage - the maximum time for which a resource is considered fresh. It is expressed in seconds and is relative to the time the response was generated. For resources that rarely change, such as images or fonts, max-age is usually set to 1 year; caching resources for longer than a year is generally not recommended. s-maxage works like max-age, except that it applies only to shared caches, where it overrides max-age; it does not affect the local cache.
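To see how these directives interact, here is a simplified Python sketch that parses a Cache-Control value and works out how long a private or a shared cache may reuse the response without contacting the server. The function names are hypothetical, the parser splits naively on commas, and a missing max-age is treated as "do not reuse", whereas real caches may apply heuristics in that case.

```python
from typing import Dict, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value into {directive: argument or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def freshness_seconds(value: str, shared: bool) -> int:
    """Seconds a cache may reuse the response without asking the server."""
    d = parse_cache_control(value)
    if "no-store" in d or "no-cache" in d:
        return 0                      # never reused without the server
    if shared and "private" in d:
        return 0                      # shared caches must not store it
    s_maxage = d.get("s-maxage")
    if shared and s_maxage is not None:
        return int(s_maxage)          # shared caches prefer s-maxage
    max_age = d.get("max-age")
    if max_age is not None:
        return int(max_age)
    return 0                          # simplification: no explicit lifetime


one_year = freshness_seconds("public, max-age=31536000", shared=False)
browser = freshness_seconds("max-age=60, s-maxage=600", shared=False)
proxy = freshness_seconds("max-age=60, s-maxage=600", shared=True)
proxy_private = freshness_seconds("private, max-age=60", shared=True)
```

With `max-age=60, s-maxage=600`, the browser cache gets 60 seconds and the shared cache 600, while a `private` response yields 0 for the shared cache.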

Configuring HTTP Cache Headers

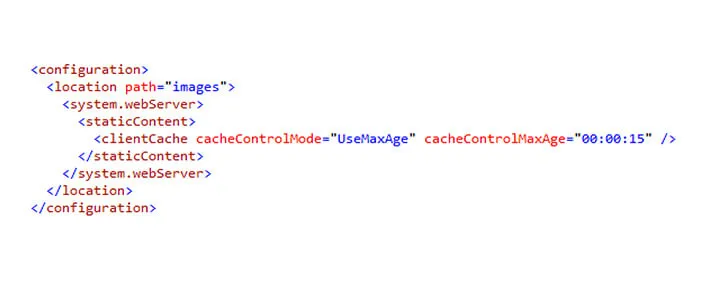

Cache headers can be configured in the web configuration file, as shown in the image, or through IIS on the web server, which writes the same settings to the web.config file. In the example in Image 7, max-age is set to 15 seconds for all files in the “images” folder.

Image 7 - MaxAge configuration in web.config

The second image shows how to set the Expires HTTP header. If both max-age and Expires are set, max-age takes precedence and caches ignore Expires.

Image 8 - Expires configuration in web.config
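The precedence of max-age over Expires can be expressed as a short Python sketch. `expiry_time` is a hypothetical helper, header names are matched exactly as written, and a response with neither header is simply treated as already stale.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime
from typing import Dict


def expiry_time(headers: Dict[str, str], received_at: datetime) -> datetime:
    """When the response stops being fresh; max-age wins over Expires."""
    for part in headers.get("Cache-Control", "").split(","):
        name, _, arg = part.strip().partition("=")
        if name.lower() == "max-age" and arg.isdigit():
            return received_at + timedelta(seconds=int(arg))
    if "Expires" in headers:
        # Expires carries an absolute HTTP date, e.g. "Wed, 21 Oct 2026 07:28:00 GMT".
        return parsedate_to_datetime(headers["Expires"])
    return received_at  # simplification: no explicit lifetime means stale


received = datetime(2026, 1, 1, 12, 0, tzinfo=timezone.utc)
expires_value = "Wed, 21 Oct 2026 07:28:00 GMT"

with_both = expiry_time({"Cache-Control": "max-age=15", "Expires": expires_value}, received)
only_expires = expiry_time({"Expires": expires_value}, received)
```

When both headers are present the result is 15 seconds after `received`, and the Expires date is used only when max-age is absent.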

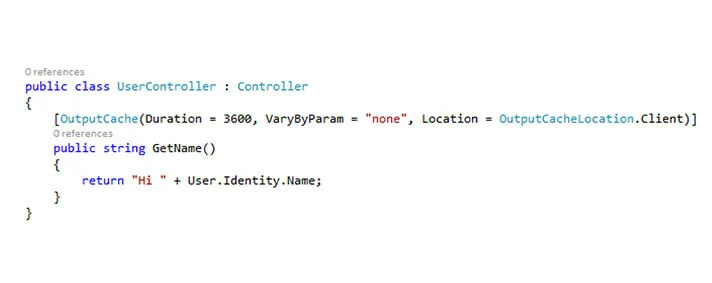

When it is necessary to cache dynamic resources or an output of a certain method, we can use the built-in OutputCache attribute in MVC.

Image 9 - OutputCache attribute available in MVC

The OutputCache attribute can accept the following parameters:

- Duration - the time, in seconds, for which the output is cached; equivalent to the max-age directive

- Vary parameters: VaryByHeader, VaryByParam, VaryByCustom, VaryByContentEncoding - comparable to the Vary HTTP header

- Location - defines where the content can be cached, using one of the OutputCacheLocation values:

  - Any - same as “cache-control:public”. The resource can be cached anywhere

  - Client - the resource will be cached on the client only

  - Downstream - the output cache can be stored on any device other than the origin server. This includes proxy servers and the client that made the request.

  - None - the output cache is disabled for the requested resources. It corresponds to the “no-cache” header directive.

  - Server - the cache is located on the web server where the request was processed

  - ServerAndClient - the resource is stored on the server or the client, but not on a proxy server. Corresponds to the combination of the "server" and "private" values.

- SqlDependency - specifies a dependency on a SQL database and a specific table in that database, e.g. SqlDependency="Northwind:Employees"

- NoStore - a Boolean; when true, the no-store directive is sent so that the response is not written to secondary storage.
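The idea behind Duration and VaryByParam can be shown with a conceptual Python analogue. This is not the ASP.NET API: `output_cache` is a hypothetical decorator that caches a function's result on the server side for a number of seconds and keeps a separate entry per set of parameters. The clock is injectable only so the example can run without waiting.

```python
import time
from typing import Any, Callable, Dict, List, Tuple


def output_cache(duration: float, clock: Callable[[], float] = time.monotonic):
    """Cache a function's output per parameter set for `duration` seconds."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        entries: Dict[Tuple, Tuple[float, Any]] = {}

        def wrapper(**params: Any) -> Any:
            key = tuple(sorted(params.items()))   # vary by every parameter
            now = clock()
            hit = entries.get(key)
            if hit is not None and now - hit[0] < duration:
                return hit[1]                     # still fresh: reuse output
            result = func(**params)               # missing or expired: render
            entries[key] = (now, result)
            return result

        return wrapper
    return decorator


fake_now = [0.0]            # controllable clock for the example
renders: List[int] = []     # records every real render


@output_cache(duration=10, clock=lambda: fake_now[0])
def product_page(product_id: int) -> str:
    renders.append(product_id)
    return f"<h1>Product {product_id}</h1>"


product_page(product_id=1)   # rendered
product_page(product_id=1)   # served from cache
product_page(product_id=2)   # different parameter: separate entry
fake_now[0] = 11.0
product_page(product_id=1)   # entry older than 10 seconds: rendered again
```

Here `renders` ends up as `[1, 2, 1]`: the repeated request for product 1 is answered from cache until its 10 seconds run out.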

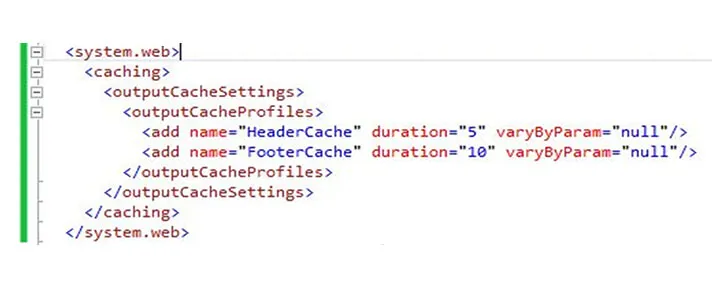

OutputCache can also be configured in the web configuration file, where we can define several profiles. The example below shows the HeaderCache and FooterCache profiles, which we can later reference by setting the CacheProfile property of the OutputCache attribute. This lets us define cache profiles once in web.config and reuse them from several places in the code.

Image 10 - Configuring output cache profiles in web.config file

To cache an entire page in ASP.NET Web Forms, we can use the OutputCache directive, which is declared at the top of the page and accepts the same properties. Here VaryByParam is set to “none”, meaning that page parameters such as POST values or the query string are not taken into account.

Image 11 - Configuring output cache directive

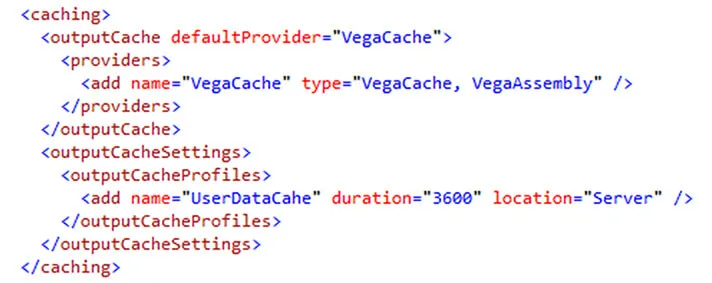

If we set the Location property of OutputCache to Server, the content is cached in the server’s memory by default. This can be a problem in a load-balanced environment, since we do not want each server to cache the same resource separately. In that case we would introduce a distributed cache such as Memcached or Redis, which requires implementing a custom cache provider that communicates with the distributed cache. The output cache section of the web configuration file allows this: as shown in Image 12, we have defined the VegaCache provider from the Vega assembly.

Image 12 - Custom cache provider

CDN - Content Delivery Network

A CDN (content delivery network) is a very effective solution for caching both static and dynamic resources. It is a distributed system of servers that delivers resources to end users based on their geographic location. When a resource is uploaded to a CDN, it is automatically distributed to the other servers of the CDN around the world, known as Edge servers.

Image 13 - Content delivery network

How does this work in practice? Let’s take an example. If the server that hosts our website is located in Europe and a visitor accesses the site from Australia, all requests made from Australia are sent to the origin server in Europe. Regardless of how fast the Internet is today, it can take a few seconds to obtain a response from our server. Caching resources in a CDN reduces the response time by reading resources from the cache rather than hitting the server every time. When a user from Australia accesses our website, the majority of resources are then served from a local Edge server that is also located in Australia, instead of being fetched from the origin server.

With a CDN, we do not have to implement the custom cache provider mentioned earlier to work with a distributed cache, since the CDN itself is a distributed system of servers.

Image 14 - With or without CDN

Image 14 shows an example of a request with and without a CDN. In this particular case, a request made from Africa to a server located in America takes longer than 3s to receive a response without a CDN, while with a CDN the time is reduced to less than 1s. The initial request is still made to the server in America, but the majority of resources are fetched from the local CDN Edge server.

A CDN recognizes HTTP cache headers and caches responses from the server for as long as the server response specifies. This prevents the CDN from contacting the server on every request. Every call from the CDN to the origin server costs money, so incorrect configuration may lead to higher costs.

A CDN acts as a proxy between client and server, so when we need a resource we specify a CDN path, and the CDN either returns a copy of the resource from cache or contacts the server to fetch it. For example, if we want to fetch the logo.png resource which is available at https://www.vegaitsourcing.rs/images/logo.png we’ll make a request to https://cdn.vegaitsourcing.rs/images/logo.png instead and the CDN will do the rest.
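Switching asset URLs to the CDN host is often just a matter of rewriting the host name. The Python sketch below uses the two host names from the example above; `to_cdn_url` is a hypothetical helper, and URLs on any other host are left untouched.

```python
from urllib.parse import urlsplit, urlunsplit

ORIGIN_HOST = "www.vegaitsourcing.rs"   # origin host from the example
CDN_HOST = "cdn.vegaitsourcing.rs"      # CDN host from the example


def to_cdn_url(url: str) -> str:
    """Point an origin asset URL at the CDN host; leave other hosts as-is."""
    parts = urlsplit(url)
    if parts.netloc != ORIGIN_HOST:
        return url
    return urlunsplit(parts._replace(netloc=CDN_HOST))


logo = to_cdn_url("https://www.vegaitsourcing.rs/images/logo.png")
external = to_cdn_url("https://example.com/images/logo.png")
```

The path and query string are preserved, so the CDN can map each request back to the same resource on the origin server.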

Even though the cost of a single request is small, without correct caching configured on the server our costs will grow, because a high-traffic website can easily incur big expenses.

CDNs also provide a cache-clearing option called Purge.

Why cache?

- To increase performance

- To reduce delay and traffic

- To make websites more responsive

- To reduce costs

Think carefully about which resources you cache. Do not put private resources, or resources that are available only to logged-in users, in a shared cache such as a CDN, as that would be a security risk. Cache resources until they change, and to refresh them use the Purge option provided by CDNs to clear the cache on Edge servers.